This week on Weird and Wacky Wednesdays: Just the facts – When truth requires a new category of understanding

In law, we are obsessed with attempting to find the truth. That is, after all, our job.

And of course we are in a strange period of time where lies are put forward as truth each and every day, particularly by the current U.S. executive branch. At the same time, AI hallucinations and the difficulty AI has in sorting out fact from fiction have created a new problem for lawyers and the courts. Add to that the calculated lies that can arise because of the ease and consequent proliferation of deep fakes, and it feels as if we are flooded with lies.

When the government tells you that you did not see something that you saw with your own eyes, and no one is held responsible for the lie, it normalizes lying. I think we should all be concerned that we are witnessing the normalization of lies.

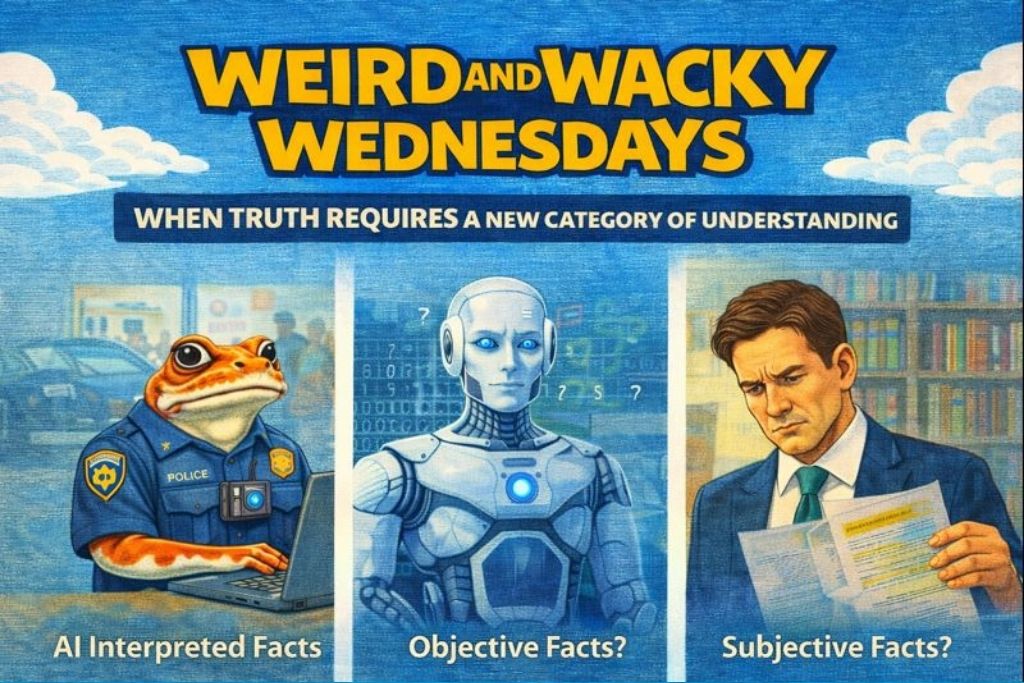

Beauty may be in the eye of the beholder, but in law we have relied on objective and subjective facts. That is often where the determination of truth is resolved. But it seems to me with AI, we may have to add a new category in the consideration of facts. Let’s look at a couple of weird and wacky Wednesday stories so you understand what I’m trying to say.

AI and just the facts

“Just the facts, ma’am” is a popular catchphrase attributed to the character Joe Friday from the 1950s TV cop show Dragnet. TV shows influence culture and reflect culture. The fact that this phrase was never actually used on the show didn’t matter. For a few decades, it was a popular way to summarize the obligation of the police in collecting evidence.

It turns out the police in some jurisdictions are now using AI, and AI can certainly depart from the facts. In December 2025, the Heber City Police Department in Utah began testing AI software to automate the creation of police reports from body cam footage. This resulted in a bizarre, widely reported incident in which the AI claimed the officer had “transformed into a frog.”

The AI program, a feature available with the body cam equipment being used in this case, listens to the audio and prepares a police report. While the police officer was investigating, the Disney movie “The Princess and the Frog” was playing in the background, and the mic on the body cam picked up the audio. The AI then blended the audio of the actual events with some of the dialogue from the film, leading it to state in the preliminary report that the officer had turned into a frog.

Now, the error was obvious to anyone who read the report. But imagine if the AI picked up something else that wasn’t so obvious. We see falsities and exaggerations in police reports fairly consistently. They don’t always include “just the facts.” My particular concern is that next time it won’t be a Disney movie playing in the background but something with more dire or sinister implications. Ultimately, the police report is relied on when we go to court to determine what took place. If AI is relied on, will we ever know the facts, or will we need to add another category to our considerations? Right now, we think of objective and subjective facts. Do we now need to include objective, subjective, and AI-interpreted facts? I wonder.

Painful early lessons of lawyers using AI

For a few months, there were numerous stories in the legal world of lawyers getting in trouble for using AI. We don’t see it quite so often these days, but that doesn’t mean that lawyers have stopped using AI.

In February 2022, lawyers acting on behalf of an individual filed a personal injury lawsuit in New York against an airline alleging that the plaintiff, a passenger on the airline, had been injured when struck by a serving cart. By now, you probably have a good idea of where this went. The filings included false legal citations asserting principles that were advantageous to the plaintiff, but the principles could not be backed up because the cases referred to were AI hallucinations.

On the one hand, I admire the lawyers for their pioneering spirit. AI is a tool, and it’s not going away. There is little value in lamenting the fact that AI will become greater and more present in our lives going forward. Along the way, we need to learn the pitfalls.

The case was dismissed, and the lawyers were fined $5,000 in June 2023. This individual may have been injured by the negligence of an airline employee, and maybe they were entitled to some compensation, but the court tossed it because of the fictions in the filings.

When we consider AI, we tend to see it as a shortcut, and the inclination is to punish someone for not doing the work.

It seems to me that using AI as a tool is somewhat pioneering. Lawyers will be using it more and more, as will the police. It will fundamentally change how we do things going forward. At this point, however, one certainly shouldn’t be using it for legal research. The knee-jerk reaction of wishing to punish people who used AI in this context seems ill-considered to me.

Objective, subjective, and AI-interpreted facts

We speak sometimes about a post-truth world where emotion is preferred over evidence. We have an erosion of trust, and people are exposing themselves only to the information that confirms their views. With the current U.S. government, we have blatant lies presented as facts and spun by the right-wing media as alternative facts. The concern is that we will have culturally induced ignorance.

It seems to me that, as everyone’s using AI to generate documents, particularly in the justice system, we will need to have a new category of facts – AI-interpreted facts. Not what was seen, not what the objective evidence shows, not just the facts, but the facts as understood by AI.

These developments in the law may be weird and wacky, but they may also fundamentally undermine the capacity to get to objective truth.

Here’s a fact you can rely on: I didn’t have time to write this for it to be published on Wednesday. So this week my team is posting Weird and Wacky Wednesdays on Thursday. It may claim above that it was posted on Wednesday, but that is not the truth.

See you again in 6 days.